Publications

2025

-

Proactive Hearing Assistants that Isolate Egocentric Conversations

Guilin Hu, Malek Itani, Tuochao Chen, and 1 more author

In Proceedings of the 2025 Conference on Empirical Methods in Natural Language Processing, Nov 2025

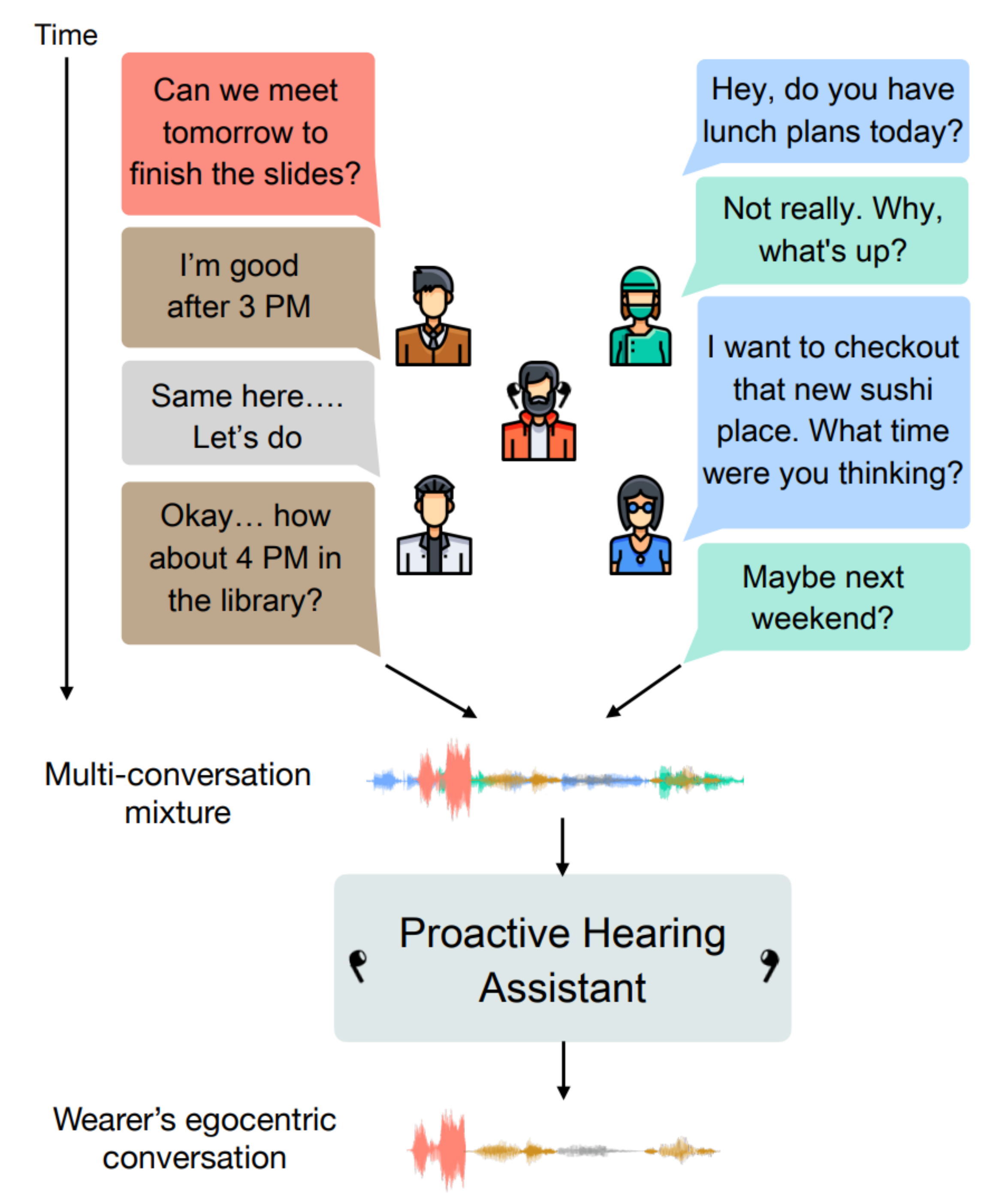

We introduce proactive hearing assistants that automatically identify and separate the wearer’s conversation partners, without requiring explicit prompts. Our system operates on egocentric binaural audio and uses the wearer’s self-speech as an anchor, leveraging turn-taking behavior and dialogue dynamics to infer conversational partners and suppress others. To enable real-time, on-device operation, we propose a dual-model architecture: a lightweight streaming model runs every 12.5 ms for low-latency extraction of the conversation partners, while a slower model runs less frequently to capture longer-range conversational dynamics. Results on real-world 2- and 3-speaker conversation test sets, collected with binaural egocentric hardware from 11 participants totaling 6.8 hours, show generalization in identifying and isolating conversational partners in multi-conversation settings. Our work marks a step toward hearing assistants that adapt proactively to conversational dynamics and engagement.
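The dual-model timing described in this abstract can be illustrated with a minimal scheduling sketch. Only the 12.5 ms fast-model period comes from the abstract; the slow-model period (`slow_every`) and the function name are hypothetical, since the abstract only says the slower model runs "less frequently".

```python
FAST_HOP_MS = 12.5  # from the abstract: the streaming model runs every 12.5 ms


def schedule(num_steps: int, slow_every: int) -> list:
    """Return (time_ms, runs_slow_model) for each fast-model step.

    slow_every is a hypothetical parameter: the slow model is assumed to
    run once every `slow_every` fast steps. The paper's actual period is
    not stated in the abstract.
    """
    if slow_every < 1:
        raise ValueError("slow_every must be at least 1")
    plan = []
    for step in range(num_steps):
        time_ms = step * FAST_HOP_MS
        runs_slow = step % slow_every == 0
        plan.append((time_ms, runs_slow))
    return plan


if __name__ == "__main__":
    # Illustrative period only: slow model every 80 fast steps (1 second).
    for time_ms, runs_slow in schedule(num_steps=4, slow_every=80):
        print(time_ms, "fast + slow" if runs_slow else "fast only")
```

The fast model runs on every step, so its latency budget is fixed at one hop, while the slow model's cost is amortized over many hops.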

-

Wireless Hearables With Programmable Speech AI Accelerators

Malek Itani, Tuochao Chen, Arun Raghavan, and 2 more authors

Nov 2025

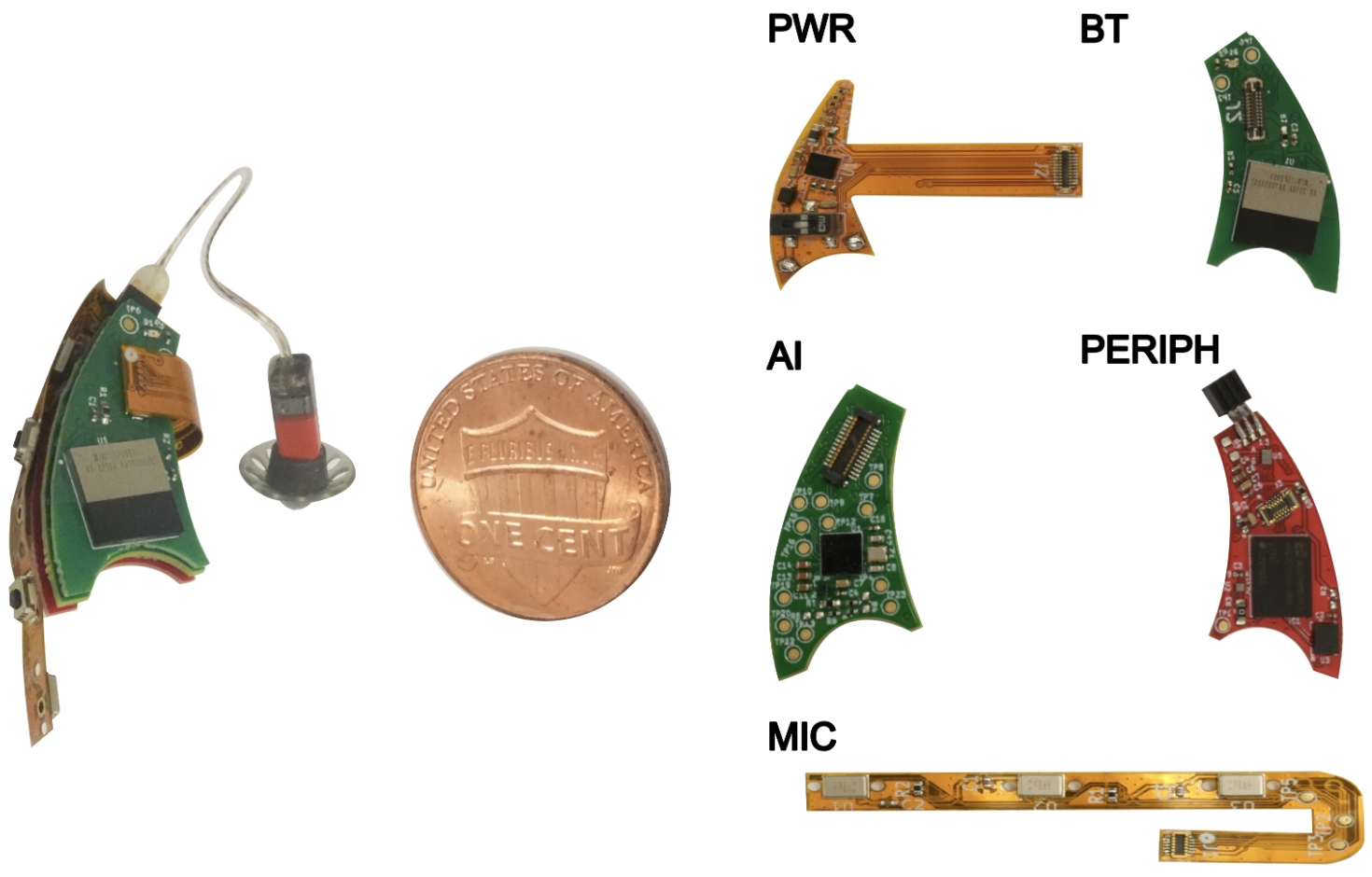

The conventional wisdom has been that designing ultra-compact, battery-constrained wireless hearables with on-device speech AI models is challenging due to the high computational demands of streaming deep learning models. Speech AI models require continuous, real-time audio processing, imposing strict computational and I/O constraints. We present NeuralAids, a fully on-device speech AI system for wireless hearables, enabling real-time speech enhancement and denoising on compact, battery-constrained devices. Our system bridges the gap between state-of-the-art deep learning for speech enhancement and low-power AI hardware by making three key technical contributions: 1) a wireless hearable platform integrating a speech AI accelerator for efficient on-device streaming inference, 2) an optimized dual-path neural network designed for low-latency, high-quality speech enhancement, and 3) a hardware-software co-design that uses mixed-precision quantization and quantization-aware training to achieve real-time performance under strict power constraints. Our system processes 6 ms audio chunks in real-time, achieving an inference time of 5.54 ms while consuming 71.6 mW. In real-world evaluations, including a user study with 28 participants, our system outperforms prior on-device models in speech quality and noise suppression, paving the way for next-generation intelligent wireless hearables that can enhance hearing entirely on-device.
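The real-time claim here reduces to simple arithmetic: a streaming model keeps up when it finishes each chunk before the next one arrives. The sketch below checks that with the chunk and inference times quoted in the abstract; the helper names are illustrative and not part of the NeuralAids system.

```python
def real_time_factor(inference_ms: float, chunk_ms: float) -> float:
    """Ratio of processing time to audio duration; below 1.0 means real-time."""
    if chunk_ms <= 0:
        raise ValueError("chunk_ms must be positive")
    return inference_ms / chunk_ms


def headroom_ms(inference_ms: float, chunk_ms: float) -> float:
    """Time left per chunk for I/O and other work (negative if too slow)."""
    return chunk_ms - inference_ms


if __name__ == "__main__":
    # Figures from the abstract: 6 ms chunks, 5.54 ms inference time.
    print(round(real_time_factor(5.54, 6.0), 3))  # about 0.923
    print(round(headroom_ms(5.54, 6.0), 2))       # about 0.46 ms of slack
```

The same check applies to the other chunk and runtime pairs reported on this page.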

-

TF-MLPNet: Tiny Real-Time Neural Speech Separation

Malek Itani, Tuochao Chen, and Shyamnath Gollakota

Nov 2025

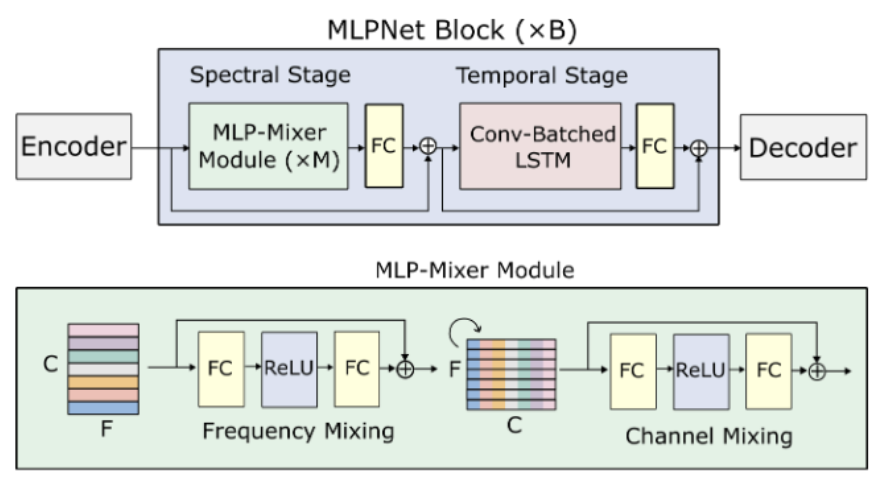

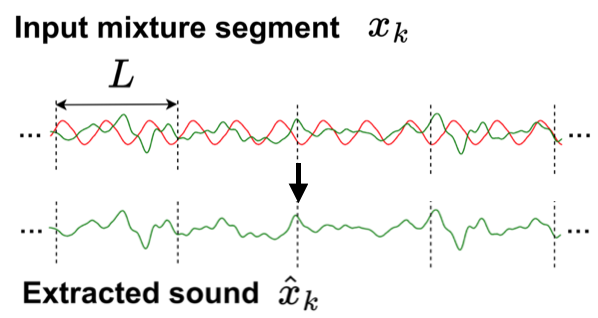

Speech separation on hearable devices can enable transformative augmented and enhanced hearing capabilities. However, state-of-the-art speech separation networks cannot run in real-time on tiny, low-power neural accelerators designed for hearables, due to their limited compute capabilities. We present TF-MLPNet, the first speech separation network capable of running in real-time on such low-power accelerators while outperforming existing streaming models for blind speech separation and target speech extraction. Our network operates in the time-frequency domain, processing frequency sequences with stacks of fully connected layers that alternate along the channel and frequency dimensions, and independently processing the time sequence at each frequency bin using convolutional layers. Results show that our mixed-precision quantization-aware trained (QAT) model can process 6 ms audio chunks in real-time on the GAP9 processor, achieving a 3.5-4x runtime reduction compared to prior speech separation models.
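For readers unfamiliar with quantization-aware training, the sketch below shows a generic symmetric uniform "fake quantization" step of the kind commonly applied in a QAT forward pass. It is an assumption-laden illustration, not TF-MLPNet's actual scheme: the per-tensor scaling, the bit widths, and the function name are all hypothetical. "Mixed precision" here simply means calling it with different bit widths for different tensors.

```python
def fake_quantize(values, num_bits):
    """Round values to a symmetric signed integer grid, then map back to floats.

    Returns (dequantized_values, scale). Generic illustration only; the
    paper's quantizer may differ.
    """
    if num_bits < 2:
        raise ValueError("num_bits must be at least 2")
    qmax = 2 ** (num_bits - 1) - 1          # e.g. 127 for 8 bits
    max_abs = max(abs(v) for v in values)
    if max_abs == 0.0:
        return [0.0 for _ in values], 1.0   # all-zero tensor: nothing to scale
    scale = max_abs / qmax                  # per-tensor step size
    dequantized = []
    for v in values:
        q = round(v / scale)                # nearest integer level
        q = max(-qmax, min(qmax, q))        # clip to the representable range
        dequantized.append(q * scale)
    return dequantized, scale


if __name__ == "__main__":
    weights = [0.8, -0.31, 0.05, -0.77]     # made-up example values
    for bits in (8, 4):                     # hypothetical mixed-precision choice
        approx, step = fake_quantize(weights, bits)
        worst = max(abs(a - w) for a, w in zip(approx, weights))
        print(bits, "bits: step", step, "worst error", worst)
```

Fewer bits give a coarser step and a larger worst-case rounding error, which is the trade-off QAT lets the network adapt to during training.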

-

Neural Speech Extraction with Human Feedback

Malek Itani, Ashton Graves, Sefik Emre Eskimez, and 1 more author

In Interspeech, Nov 2025

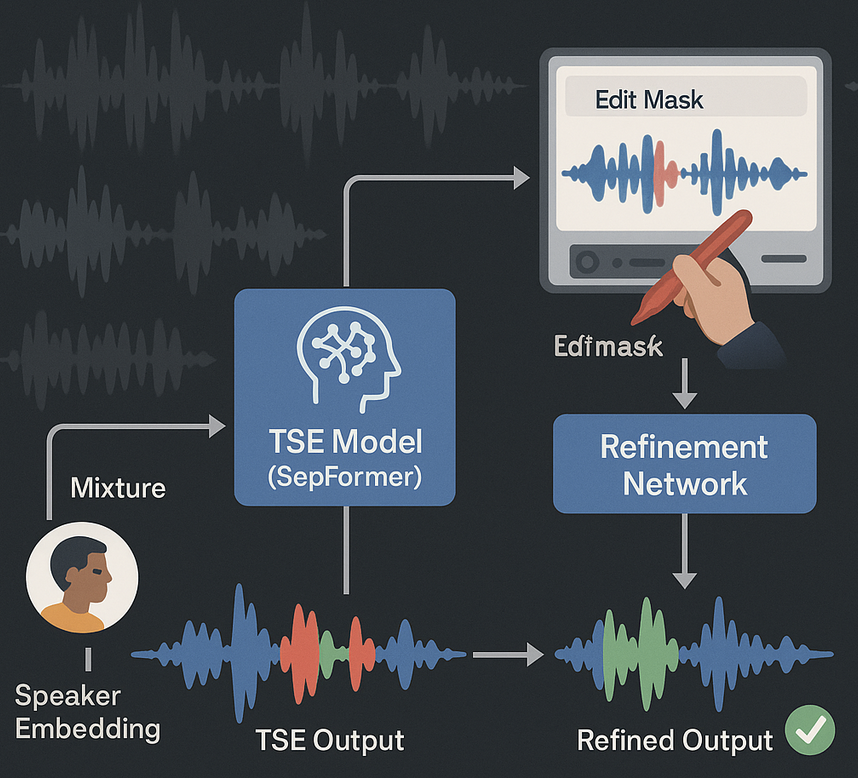

We present the first neural target speech extraction (TSE) system that uses human feedback for iterative refinement. Our approach allows users to mark specific segments of the TSE output, generating an edit mask. The refinement system then improves the marked sections while preserving unmarked regions. Since large-scale datasets of human-marked errors are difficult to collect, we generate synthetic datasets using various automated masking functions and train models on each. Evaluations show that models trained with noise power-based masking (in dBFS) and probabilistic thresholding perform best, aligning with human annotations. In a study with 22 participants, users showed a preference for refined outputs over baseline TSE. Our findings demonstrate that human-in-the-loop refinement is a promising approach for improving the performance of neural speech extraction.

2024

-

Hearable devices with sound bubbles

Tuochao Chen, Malek Itani, Sefik Emre Eskimez, and 2 more authors

Nature Electronics, Nov 2024

The human auditory system has a limited ability to perceive distance and distinguish speakers in crowded settings. A headset technology that can create a sound bubble in which all speakers within the bubble are audible but speakers and noise outside the bubble are suppressed could augment human hearing. However, developing such technology is challenging. Here, we report an intelligent headset system capable of creating sound bubbles. The system is based on real-time neural networks that use acoustic data from up to six microphones integrated into noise-cancelling headsets and are run on the device, processing 8 ms audio chunks in 6.36 ms on an embedded central processing unit. Our neural networks can generate sound bubbles with programmable radii between 1 m and 2 m, and with output signals that reduce the intensity of sounds outside the bubble by 49 dB. With previously unseen environments and wearers, our system can focus on up to two speakers within the bubble, with one to two interfering speakers and noise outside the bubble.
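To put the 49 dB figure in linear terms, the sketch below applies the standard decibel definitions. The function names are illustrative; only the 49 dB value comes from the abstract.

```python
def db_to_power_ratio(db: float) -> float:
    """Power (intensity) ratio for a level difference in dB: 10^(dB/10)."""
    return 10 ** (db / 10)


def db_to_amplitude_ratio(db: float) -> float:
    """Amplitude (pressure) ratio for a level difference in dB: 10^(dB/20)."""
    return 10 ** (db / 20)


if __name__ == "__main__":
    # 49 dB reduction reported in the abstract.
    print(round(db_to_power_ratio(49)))          # roughly 79,000x lower power
    print(round(db_to_amplitude_ratio(49), 1))   # roughly 282x lower amplitude
```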

-

Target conversation extraction: Source separation using turn-taking dynamics

Tuochao Chen, Qirui Wang, Bohan Wu, and 4 more authors

In Interspeech, Nov 2024

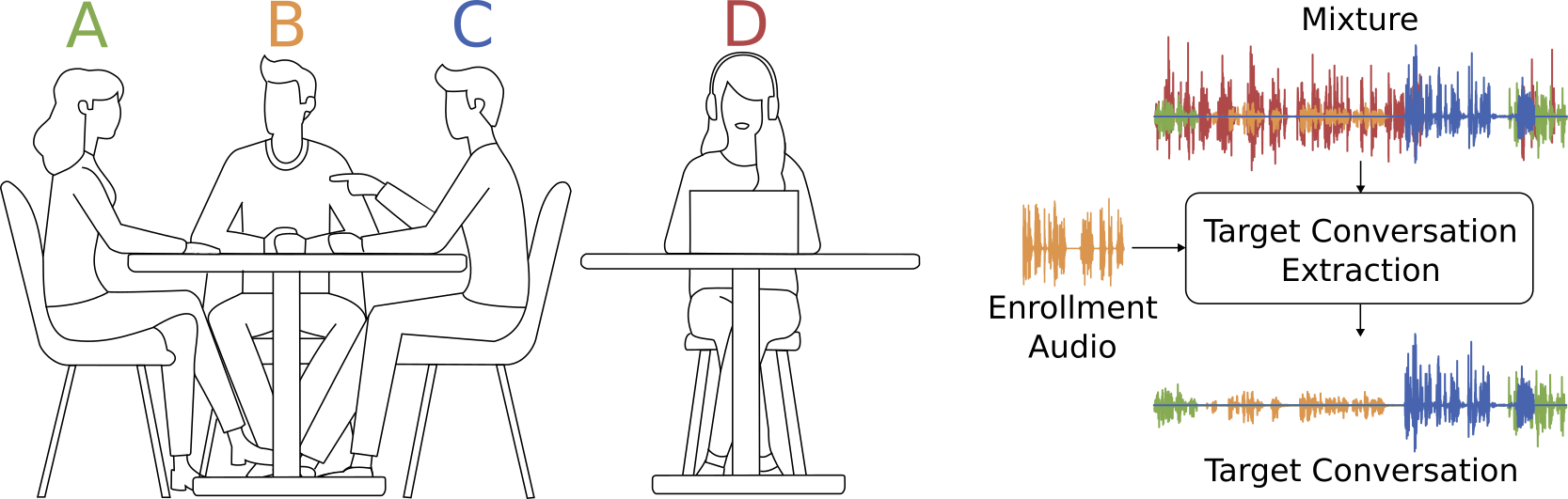

Extracting the speech of participants in a conversation amidst interfering speakers and noise presents a challenging problem. In this paper, we introduce the novel task of target conversation extraction, where the goal is to extract the audio of a target conversation based on the speaker embedding of one of its participants. To accomplish this, we propose leveraging temporal patterns inherent in human conversations, particularly turn-taking dynamics, which uniquely characterize speakers engaged in conversation and distinguish them from interfering speakers and noise. Using neural networks, we show the feasibility of our approach on English and Mandarin conversation datasets. In the presence of interfering speakers, our results show an 8.19 dB improvement in signal-to-noise ratio for 2-speaker conversations and a 7.92 dB improvement for 2-4-speaker conversations. Code, dataset available at this https URL.

-

Knowledge boosting during low-latency inference

Vidya Srinivas, Malek Itani, Tuochao Chen, and 3 more authors

In Interspeech, Nov 2024

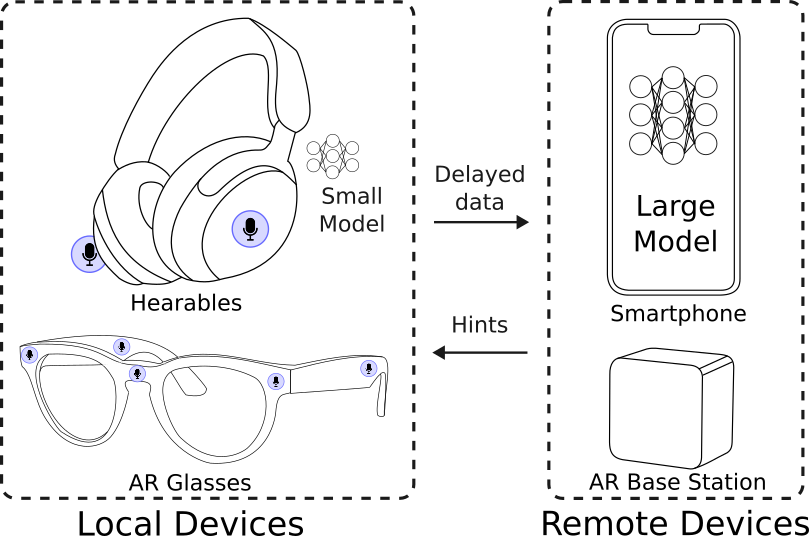

Models for low-latency, streaming applications could benefit from the knowledge capacity of larger models, but edge devices cannot run these models due to resource constraints. A possible solution is to transfer hints during inference from a large model running remotely to a small model running on-device. However, this incurs a communication delay that breaks real-time requirements and does not guarantee that both models will operate on the same data at the same time. We propose knowledge boosting, a novel technique that allows a large model to operate on time-delayed input during inference, while still boosting small model performance. Using a streaming neural network that processes 8 ms chunks, we evaluate different speech separation and enhancement tasks with communication delays of up to six chunks or 48 ms. Our results show larger gains where the performance gap between the small and large models is wide, demonstrating a promising method for large-small model collaboration for low-latency applications. Code, dataset, and audio samples available at this https URL.
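The timing constraint in this abstract can be sketched as a simple delay line: at chunk t, the on-device model can only see the large model's hint for chunk t minus the communication delay. The code below models just that alignment, assuming hints are opaque objects; the function name and buffering strategy are hypothetical and do not reflect the paper's implementation.

```python
from collections import deque

CHUNK_MS = 8  # from the abstract: the streaming network processes 8 ms chunks


def delayed_hints(large_model_hints, delay_chunks):
    """Yield the hint visible to the small model at each chunk index.

    At chunk t the small model receives the hint computed from chunk
    t - delay_chunks, or None while no hint has arrived yet.
    """
    if delay_chunks < 0:
        raise ValueError("delay_chunks must be non-negative")
    in_flight = deque()  # hints still "in transit" from the remote model
    for hint in large_model_hints:
        in_flight.append(hint)
        if len(in_flight) > delay_chunks:
            yield in_flight.popleft()
        else:
            yield None


if __name__ == "__main__":
    delay_chunks = 6  # largest delay evaluated in the abstract
    print("delay:", delay_chunks * CHUNK_MS, "ms")  # 6 chunks x 8 ms = 48 ms
    hints = [f"hint_{t}" for t in range(8)]         # placeholder hint objects
    print(list(delayed_hints(hints, delay_chunks)))
```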

-

Look Once to Hear: Target Speech Hearing with Noisy Examples

Bandhav Veluri, Malek Itani, Tuochao Chen, and 2 more authors

In Proceedings of the CHI Conference on Human Factors in Computing Systems, Nov 2024

In crowded settings, the human brain can focus on speech from a target speaker, given prior knowledge of how they sound. We introduce a novel intelligent hearable system that achieves this capability, enabling target speech hearing to ignore all interfering speech and noise except the target speaker. A naïve approach is to require a clean speech example to enroll the target speaker. This, however, is not well aligned with the hearable application domain since obtaining a clean example is challenging in real-world scenarios, creating a unique user interface problem. We present the first enrollment interface where the wearer looks at the target speaker for a few seconds to capture a single, short, highly noisy, binaural example of the target speaker. This noisy example is used for enrollment and subsequent speech extraction in the presence of interfering speakers and noise. Our system achieves a signal quality improvement of 7.01 dB using less than 5 seconds of noisy enrollment audio and can process 8 ms audio chunks in 6.24 ms on an embedded CPU. Our user studies demonstrate generalization to real-world static and mobile speakers in previously unseen indoor and outdoor multipath environments. Finally, our enrollment interface for noisy examples does not cause performance degradation compared to clean examples, while being convenient and user-friendly. Taking a step back, this paper takes an important step towards enhancing human auditory perception with artificial intelligence.

2023

-

Semantic Hearing: Programming Acoustic Scenes with Binaural Hearables

Bandhav Veluri, Malek Itani, Justin Chan, and 2 more authors

In Proceedings of the 36th Annual ACM Symposium on User Interface Software and Technology, Nov 2023

Imagine being able to listen to the birds chirping in a park without hearing the chatter from other hikers, or being able to block out traffic noise on a busy street while still being able to hear emergency sirens and car honks. We introduce semantic hearing, a novel capability for hearable devices that enables them to, in real-time, focus on, or ignore, specific sounds from real-world environments, while also preserving the spatial cues. To achieve this, we make two technical contributions: 1) we present the first neural network that can achieve binaural target sound extraction in the presence of interfering sounds and background noise, and 2) we design a training methodology that allows our system to generalize to real-world use. Results show that our system can operate with 20 sound classes and that our transformer-based network has a runtime of 6.56 ms on a connected smartphone. In-the-wild evaluation with participants in previously unseen indoor and outdoor scenarios shows that our proof-of-concept system can extract the target sounds and generalize to preserve the spatial cues in its binaural output. Project page with code: https://semantichearing.cs.washington.edu

-

Creating speech zones with self-distributing acoustic swarms

Malek Itani, Tuochao Chen, Takuya Yoshioka, and 1 more author

Nature Communications, Sep 2023

Imagine being in a crowded room with a cacophony of speakers and having the ability to focus on or remove speech from a specific 2D region. This would require understanding and manipulating an acoustic scene, isolating each speaker, and associating a 2D spatial context with each constituent speech. However, separating speech from a large number of concurrent speakers in a room into individual streams and identifying their precise 2D locations is challenging, even for the human brain. Here, we present the first acoustic swarm that demonstrates cooperative navigation with centimeter resolution using sound, eliminating the need for cameras or external infrastructure. Our acoustic swarm forms a self-distributing wireless microphone array, which, along with our attention-based neural network framework, lets us separate and localize concurrent human speakers in the 2D space, enabling speech zones. Our evaluations showed that the acoustic swarm could localize and separate 3-5 concurrent speech sources in real-world unseen reverberant environments with median and 90th-percentile 2D errors of 15 cm and 50 cm, respectively. Our system enables applications like mute zones (parts of the room where sounds are muted), active zones (regions where sounds are captured), multi-conversation separation, and location-aware interaction.

-

Wireless Earbuds for Low-Cost Hearing Screening

Justin Chan, Antonio Glenn, Malek Itani, and 5 more authors

In Proceedings of the 21st Annual International Conference on Mobile Systems, Applications and Services, Jun 2023

We present the first wireless earbud hardware that can perform hearing screening by detecting otoacoustic emissions. The conventional wisdom has been that detecting otoacoustic emissions, which are the faint sounds generated by the cochlea, requires sensitive and expensive acoustic hardware. Thus, medical devices for hearing screening cost thousands of dollars and are inaccessible in low- and middle-income countries. We show that by designing wireless earbuds using low-cost acoustic hardware and combining them with wireless sensing algorithms, we can reliably identify otoacoustic emissions and perform hearing screening. Our algorithms combine frequency-modulated chirps with wideband pulses emitted from a low-cost speaker to reliably separate otoacoustic emissions from in-ear reflections and echoes. We conducted a clinical study with 50 ears across two healthcare sites. Our study shows that the low-cost earbuds detect hearing loss with 100% sensitivity and 89.7% specificity, which is comparable to the performance of an $8000 medical device. By developing low-cost and open-source wearable technology, our work may help address global health inequities in hearing screening by democratizing these medical devices. Open-source hardware and code can be found here: https://github.com/uw-x/OAEbuds
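As a reminder of how the two screening metrics quoted above are defined, the sketch below computes sensitivity and specificity from confusion-matrix counts. The counts in the usage example are hypothetical and are not the study's data; the abstract reports only the resulting percentages.

```python
def sensitivity(true_pos: int, false_neg: int) -> float:
    """Fraction of ears with hearing loss that the test flags."""
    return true_pos / (true_pos + false_neg)


def specificity(true_neg: int, false_pos: int) -> float:
    """Fraction of ears without hearing loss that the test clears."""
    return true_neg / (true_neg + false_pos)


if __name__ == "__main__":
    # Hypothetical counts for illustration only (NOT from the clinical study).
    print(sensitivity(true_pos=9, false_neg=1))   # 0.9
    print(specificity(true_neg=36, false_pos=4))  # 0.9
```

A sensitivity of 100% means no ear with hearing loss was missed, which is the property that matters most for a screening tool.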

-

Real-Time Target Sound Extraction

Bandhav Veluri, Justin Chan, Malek Itani, and 3 more authors

In ICASSP 2023 - 2023 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP), Jun 2023

We present the first neural network model to achieve real-time and streaming target sound extraction. To accomplish this, we propose Waveformer, an encoder-decoder architecture with a stack of dilated causal convolution layers as the encoder, and a transformer decoder layer as the decoder. This hybrid architecture uses dilated causal convolutions for processing large receptive fields in a computationally efficient manner, while also leveraging the generalization performance of transformer-based architectures. Our evaluations show as much as 2.2–3.3 dB improvement in SI-SNRi compared to the prior models for this task while having a 1.2–4x smaller model size and a 1.5–2x lower runtime. We provide code, dataset, and audio samples: https://waveformer.cs.washington.edu/.
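The efficiency argument for dilated causal convolutions can be made concrete with the standard receptive-field formula for a stack of stride-1 layers. The kernel size and dilation schedule below are assumptions chosen for illustration; the abstract does not state Waveformer's actual configuration.

```python
def receptive_field(kernel_size: int, dilations) -> int:
    """Input samples seen by one output of a stack of stride-1 convolutions.

    Each layer with dilation d adds (kernel_size - 1) * d samples of context.
    """
    return 1 + (kernel_size - 1) * sum(dilations)


def causal_left_padding(kernel_size: int, dilation: int) -> int:
    """Left padding that keeps a layer causal (no look-ahead into the future)."""
    return (kernel_size - 1) * dilation


if __name__ == "__main__":
    # Hypothetical configuration: 10 layers, kernel size 3.
    exponential = [2 ** i for i in range(10)]   # dilations 1, 2, 4, ..., 512
    constant = [1] * 10                         # no dilation
    print(receptive_field(3, exponential))      # 2047 samples
    print(receptive_field(3, constant))         # 21 samples
```

With the same number of layers and parameters, exponentially growing dilations cover about a hundred times more context than undilated layers in this example, which is why they suit streaming models with tight compute budgets.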